OpenAI has released the much-anticipated updates that let its popular ChatGPT chatbot work with images and audio. The launch is a significant step toward OpenAI's ambition of artificial general intelligence that can see and process information from a variety of media in addition to text.

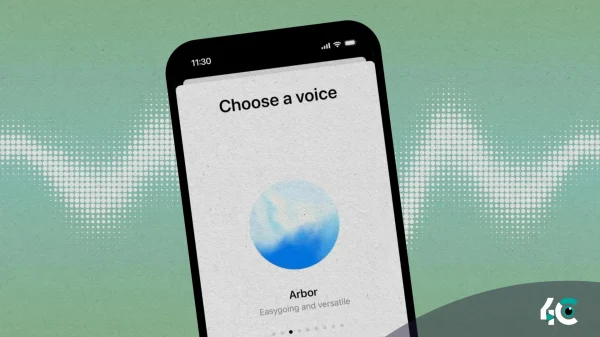

We are beginning to roll out new voice and image capabilities in ChatGPT. They offer a new, more intuitive type of interface by allowing you to have a voice conversation or show ChatGPT what you’re talking about.

OpenAI said in its official blog post.

With the updates announced on September 25, ChatGPT users will be able to hold spoken conversations with the chatbot. The models that underpin ChatGPT, GPT-3.5 and GPT-4, can now understand spoken queries in plain English and respond in one of five distinct voices.

ChatGPT can now see, hear, and speak. Rolling out over next two weeks, Plus users will be able to have voice conversations with ChatGPT (iOS & Android) and to include images in conversations (all platforms). https://t.co/uNZjgbR5Bm pic.twitter.com/paG0hMshXb

— OpenAI (@OpenAI) September 25, 2023

Within the next two weeks, the new features will reach Plus and Enterprise customers, with voice conversations on the iOS and Android apps and image input on all platforms. After that, access will be extended to all users, including developers. OpenAI said that ChatGPT Plus will feature voice chat, driven by a new text-to-speech model capable of producing human-like voices, as well as the ability to discuss images, which is made possible by the multimodal versions of the GPT-3.5 and GPT-4 models. The new capabilities appear to be part of what is known as GPT Vision (or GPT-V, which is sometimes confused with a hypothetical GPT-5), and they are major components of the upgraded multimodal version of GPT-4 that OpenAI announced earlier this year.

This update arrives directly on the heels of DALL-E 3, OpenAI's most sophisticated text-to-image generator to date. DALL-E 3 can produce high-fidelity images from text prompts while understanding complex context and ideas expressed in natural language. Early testers called it "insane" because of its quality and accuracy. It will be integrated into ChatGPT Plus, OpenAI's paid subscription service.