On August 8, OpenAI released its evaluation of the GPT-4o model, rating as “medium risk” the model’s potential to influence political opinions through its generated text. The assessment is part of OpenAI’s ongoing efforts to ensure the safety and effectiveness of its artificial intelligence systems.

OpenAI tested the GPT-4o model, publicly launched in May, across several risk categories, including cybersecurity, biological threats, persuasion, and model autonomy. The model was rated medium risk for textual persuasion and low risk for cybersecurity, biological threats, and autonomy. The low autonomy rating means the model is unlikely to update itself or act autonomously.

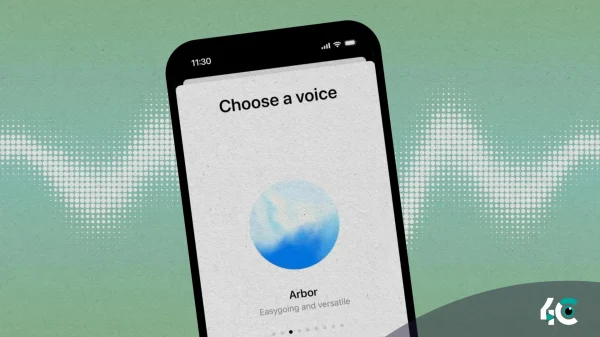

OpenAI’s evaluation found that GPT-4o could in some cases produce text more persuasive than human-written content, though not consistently. The “medium risk” rating specifically pertains to the model’s ability to sway political opinions, as tested through articles and chatbot interactions. OpenAI rated the model’s voice capabilities as low risk, identifying no significant concerns.

The company has implemented safeguards to address these potential risks, focusing particularly on its audio features and ensuring that the model’s outputs do not propagate misinformation or unauthorized content. OpenAI’s disclosure of GPT-4o’s risks comes amid broader calls for more stringent AI regulations and safety measures.